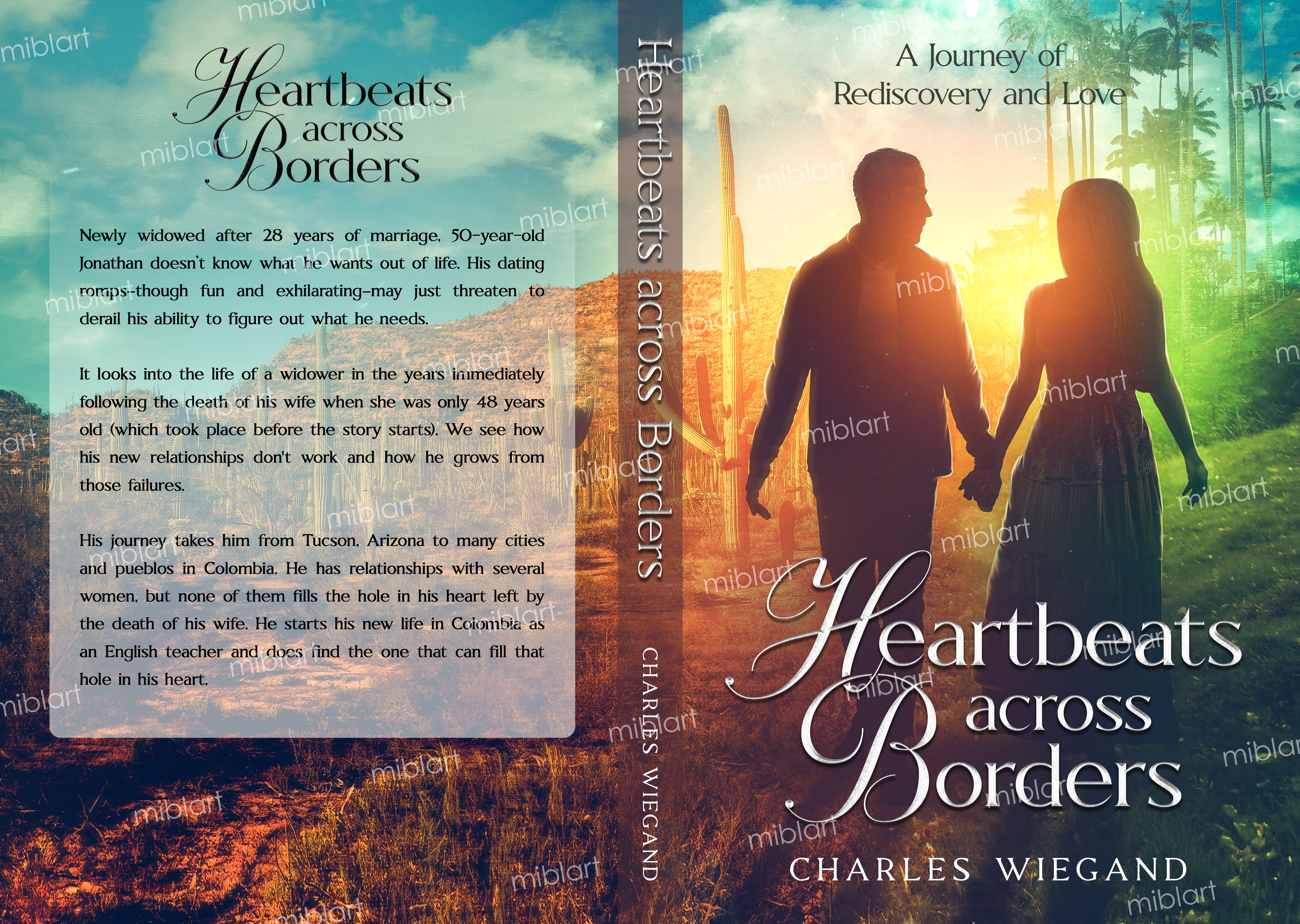

My book "Heartbeats Across Borders" is available now on Amazon.com! Be sure to get your copy! And sign up for my mailing list.

A Collection of Short Stories

Two hearts, two countries, one love

April 2, 2025

It’s 2025. We carry AI in our pockets, stream 8K video on demand, and talk to chatbots that can quote Shakespeare, debug code, and flirt all in the same breath. We’ve mapped the human genome, landed rovers on Mars, and built quantum computers in glass-walled labs. And yet, here we are—still shackled to the same CPU structure we had 20, 30, even 50 years ago.

Why? Because convenience rules.

Let’s back up a second.

The Illusion of Multitasking

Most people think modern computers can multitask, and in a way, they do. But it’s sleight of hand. A single-core CPU still only follows one instruction stream at a time. Preemptive multitasking just interrupts the running task and switches to another, many times per second, so fast that it feels simultaneous. It’s like a juggler with lightning reflexes: he only touches one ball at a time, but he makes you think they’re all floating.
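To make the juggling concrete, here is a minimal sketch of round-robin time slicing on a single core. The task names, the workload sizes, and the `quantum` parameter are all made up for illustration, and a real operating system preempts tasks with timer interrupts and far more elaborate scheduling policies than this simple loop.

```python
from collections import deque


def round_robin(tasks, quantum):
    """Simulate one core time-slicing between tasks.

    tasks: dict of task name -> units of work remaining (hypothetical workload).
    quantum: the most units a task may run before it is switched out.
    Returns the ordered list of (task, units_run) slices the core executed.
    """
    queue = deque(tasks.items())
    timeline = []
    while queue:
        name, remaining = queue.popleft()
        ran = min(quantum, remaining)      # run until done or the slice expires
        timeline.append((name, ran))
        if remaining > ran:                # unfinished: back of the line
            queue.append((name, remaining - ran))
    return timeline


timeline = round_robin({"browser": 3, "music": 2, "editor": 1}, quantum=1)
for name, ran in timeline:
    print(f"{name} ran for {ran} unit(s)")
```

Read the printed timeline top to bottom: at no point are two tasks running together. The core only ever holds one ball; it just passes them around quickly.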

Throw in multi-core CPUs and things get more interesting. Now you can actually run multiple tasks in parallel. But even here, each core is still working through its own instruction stream, one step after another. We’re not multitasking; we’re task-juggling with more hands.

So What About True Multitasking?

You might ask: Are there any systems that actually perform true multitasking—where a single unit handles multiple, simultaneous instruction streams without switching?

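Short answer: Not really. Not yet.

Even in the world of supercomputers and AI, we’re still fundamentally tied to the old Von Neumann model: fetch, decode, execute, with each hardware thread stepping through one instruction stream in order. Modern cores do overlap work under the hood: pipelining and superscalar execution keep several instructions in flight at once, and hyper-threading lets two threads share one core’s execution units. But the program still sees a single sequential stream. Everything layered on top (threads, processes, task scheduling) is smoke, mirrors, and clever time management.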
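The fetch, decode, execute loop is simple enough to sketch as a toy interpreter. This is an illustration only: the four opcodes and the single accumulator register are invented for the example and do not correspond to any real instruction set. What it does share with a Von Neumann machine is that instructions and data live in the same memory, and a program counter walks through them one at a time.

```python
def run(memory):
    """Run a toy stored-program machine until it reaches HALT.

    memory holds both instructions (tuples) and data (ints) in one list.
    The opcodes LOAD, ADD, STORE and HALT are hypothetical.
    Returns (accumulator value, number of instructions executed).
    """
    pc = 0       # program counter: address of the next instruction
    acc = 0      # single accumulator register
    steps = 0
    while True:
        instr = memory[pc]        # fetch
        op, arg = instr           # decode
        pc += 1
        steps += 1
        if op == "LOAD":          # execute
            acc = memory[arg]
        elif op == "ADD":
            acc += memory[arg]
        elif op == "STORE":
            memory[arg] = acc
        elif op == "HALT":
            return acc, steps
        else:
            raise ValueError(f"unknown opcode {op!r}")


program = [
    ("LOAD", 4),    # acc = memory[4]
    ("ADD", 5),     # acc += memory[5]
    ("STORE", 6),   # memory[6] = acc
    ("HALT", 0),
    2, 3, 0,        # data at addresses 4, 5 and 6
]
result, steps = run(program)
print(result, steps, program[6])
```

However many tricks real hardware layers on top, this is the contract software is written against: one program counter, one next instruction.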

But that doesn’t mean we haven’t tried to evolve.

The Exotic Alternatives

There are promising new computing paradigms out there. They just haven’t broken through yet. Why? Well, let’s talk about a few of them:

-Neuromorphic Computing mimics the brain’s neurons and synapses. Systems like Intel’s Loihi or IBM’s TrueNorth fire virtual neurons only when triggered, enabling massive parallelism. Not multitasking like we know it, but arguably closer to how our minds work.

-Dataflow Architectures don’t follow a single instruction stream. Instructions execute as soon as their input data is ready. It’s reactive, not linear. It’s powerful. It’s... still niche.

-Photonic Computing replaces electrons with light. This could mean massively parallel processing with far less heat and very low latency. Sounds amazing. Still mostly in prototype land.

-Quantum Computing uses qubits that, thanks to superposition and entanglement, can represent many states at once. But it’s not multitasking in the conventional sense: measuring the result gives you a single answer, so it’s probabilistic magic for very specific types of problems, not running your calendar app and browser at once.
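Of the four, the dataflow idea is the easiest to sketch in ordinary code. The example below is a software simulation of the firing rule only, not a model of any real dataflow machine: the graph format, the node names, and the `run_dataflow` helper are all assumptions made for illustration. There is no program counter; a node runs as soon as every value it depends on exists.

```python
import operator


def run_dataflow(nodes, inputs):
    """Fire each node as soon as all of its input values are available.

    nodes: name -> (function, [names of its inputs]); a hypothetical format.
    inputs: name -> value for the initial data.
    Returns (all computed values, the order in which nodes fired).
    """
    values = dict(inputs)
    pending = dict(nodes)
    order = []
    while pending:
        # Every node whose inputs are all present is ready in this wave.
        ready = [name for name, (_, deps) in pending.items()
                 if all(d in values for d in deps)]
        if not ready:
            raise ValueError("graph has missing or cyclic dependencies")
        # On real dataflow hardware the ready nodes could fire in parallel;
        # this simulation fires them one after another.
        for name in ready:
            fn, deps = pending.pop(name)
            values[name] = fn(*(values[d] for d in deps))
            order.append(name)
    return values, order


# (a + b) * (c - d), deliberately listed out of order
graph = {
    "product": (operator.mul, ["sum", "diff"]),
    "sum": (operator.add, ["a", "b"]),
    "diff": (operator.sub, ["c", "d"]),
}
values, order = run_dataflow(graph, {"a": 1, "b": 2, "c": 7, "d": 3})
print(values["product"], order)
```

Notice that the order the nodes are written in does not matter. The data decides what runs next, which is exactly what makes the model attractive and exactly what makes it foreign to tools built around sequential instruction streams.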

So Why Are We Still Stuck?

Because changing architecture means changing everything. New compilers. New operating systems. New developer tools. Retraining the workforce. Rethinking the entire software ecosystem. You don’t just replace the CPU. You tear out the foundation of the digital world.

And for what? Slightly better performance in edge-case scenarios? The business case doesn’t sell. Not yet.

Faster Horses

Remember that apocryphal quote attributed to Henry Ford? "If I had asked people what they wanted, they would have said faster horses." That’s what we’re doing with CPUs. Faster, smaller, cooler, cheaper. We haven’t reinvented the wheel; we’ve just made it spin faster.

In the end, convenience beats innovation every time. The CPU still "works." Our OSes still expect Von Neumann logic. Our developers are fluent in old paradigms. Radical change rarely wins, not because it's wrong, but because it's inconvenient.

Innovation that succeeds is usually just revolution disguised as evolution.

Final Thought

We might someday build machines that multitask the way we imagine—truly and literally. But if we ever do, they won't just need new hardware. They’ll need a new world to plug into. Until then, we’ll keep polishing the old models, pretending we’re running marathons on hoverboards, when really, we’re just running faster in slightly cooler shoes.

And maybe that’s okay. For now.

But don’t stop asking, "Why are we still doing it this way?" Because that’s how revolutions begin.